※ The reference code is available here.

Intel provides Intel® Quick Sync Video (QSV) for video processing tasks such as encoding, decoding, and resizing. The QSV libraries are part of the Intel® Media SDK.

In most cases, users of Intel QSV are interested in H264 encoding or transcoding. However, the example code from the tutorial package does not work for streaming.

The root cause is that the H264 NAL (Network Abstraction Layer) units output by the Media SDK lack the SPS (Sequence Parameter Set) and PPS (Picture Parameter Set) information. By default, the Media SDK does not include this information in the H264 NAL units, but RTSP streaming requires it. This post fills that gap: it makes the Intel® Media SDK usable for streaming.
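To make the root cause concrete, here is a minimal sketch, not taken from the original project, that scans an Annex B H264 byte stream (NAL units separated by `00 00 01` or `00 00 00 01` start codes) and checks whether it carries an SPS (nal_unit_type 7) and a PPS (nal_unit_type 8). The helper names `NalUnitTypes` and `HasSpsAndPps` are hypothetical. Running something like this over a dump of the encoder output is one way to confirm whether the parameter sets are present.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns the nal_unit_type (low 5 bits of the NAL header byte) of every
// NAL unit found in an Annex B byte stream. Both 3-byte (00 00 01) and
// 4-byte (00 00 00 01) start codes are recognized, because the 4-byte
// form simply ends with the 3-byte pattern.
std::vector<int> NalUnitTypes(const std::vector<std::uint8_t>& stream)
{
    std::vector<int> types;
    for (std::size_t i = 0; i + 3 < stream.size(); ++i) {
        if (stream[i] == 0x00 && stream[i + 1] == 0x00 && stream[i + 2] == 0x01) {
            types.push_back(stream[i + 3] & 0x1F);
            i += 3;  // skip the start code and the header byte
        }
    }
    return types;
}

// True if the stream carries both an SPS (type 7) and a PPS (type 8).
bool HasSpsAndPps(const std::vector<std::uint8_t>& stream)
{
    bool has_sps = false;
    bool has_pps = false;
    for (int type : NalUnitTypes(stream)) {
        if (type == 7) has_sps = true;
        if (type == 8) has_pps = true;
    }
    return has_sps && has_pps;
}
```

An IDR slice alone (`00 00 00 01 65 ...`) yields `{5}` and fails the check; the same slice preceded by an SPS (`67 ...`) and a PPS (`68 ...`) yields `{7, 8, 5}` and passes.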

This post continues my previous one, A RTSP Server Based on Qt Framework and LIVE555; here, the x264 encoder is replaced with the Intel® Media SDK encoder.

0. Implement the QSV H264Encoder class, based on the tutorial simple_3_encode

We name this class IntelHDGraphicsH264Encoder; it is a subclass of H264Encoder (to unify the encoder interfaces):

    class IntelHDGraphicsH264Encoder : public H264Encoder
    {
    public:
        static bool IsHardwareSupport(QSize resolution);

    public:
        IntelHDGraphicsH264Encoder(void);
        ~IntelHDGraphicsH264Encoder(void) Q_DECL_OVERRIDE;

    public:
        int Init(int width, int height) Q_DECL_OVERRIDE;
        void Close(void) Q_DECL_OVERRIDE;
        QQueue<int> Encode(unsigned char *p_frame_data,
                           QQueue<QByteArray> &ref_queue) Q_DECL_OVERRIDE;

    private:
        mfxStatus ExtendMfxBitstream(mfxU32 new_size);

    private:
        int m_width, m_height;
        MFXVideoSession m_session;
        MFXVideoENCODE *m_p_enc;
        mfxU8 *m_p_surface_buffers;
        mfxFrameSurface1 **m_pp_encoding_surfaces;
        mfxU16 m_num_surfaces;
        mfxBitstream m_mfx_bitsream;

    private:
        QMutex m_mutex;
    };
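The QSV encoder expects NV12 input, so if the frames handed to `Encode()` through `p_frame_data` are planar I420 (for example, the layout an x264-based pipeline typically feeds), they have to be converted first. The following is a minimal sketch under that assumption; `I420ToNV12` is a hypothetical helper, and it ignores row pitch, whereas a real `mfxFrameSurface1` may have a pitch larger than the frame width.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical helper: converts one tightly packed I420 frame (Y plane,
// then U plane, then V plane, each chroma plane (width/2) x (height/2))
// into NV12 (Y plane, then a single interleaved UVUV... plane).
// Width and height are assumed even; no row padding on either side.
void I420ToNV12(const std::uint8_t* i420, std::uint8_t* nv12, int width, int height)
{
    const std::size_t y_size = static_cast<std::size_t>(width) * height;
    const std::size_t chroma_size = y_size / 4;

    // The luma plane is identical in both layouts.
    std::memcpy(nv12, i420, y_size);

    const std::uint8_t* u = i420 + y_size;
    const std::uint8_t* v = u + chroma_size;
    std::uint8_t* uv = nv12 + y_size;

    // Interleave the U and V samples pairwise.
    for (std::size_t i = 0; i < chroma_size; ++i) {
        uv[2 * i] = u[i];
        uv[2 * i + 1] = v[i];
    }
}
```

Both layouts occupy width × height × 3 / 2 bytes, so the destination buffer is the same size as the source.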

The static member function IsHardwareSupport checks whether the hardware supports Intel QSV and whether the given resolution can be encoded. (QSV requires the width to be a multiple of 16, and the maximum resolution is 1920x1200.)
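The resolution half of that check can be expressed on its own. This is a minimal sketch assuming the two rules just stated are the only constraints; `IsResolutionEncodable` is a hypothetical name, not the project's function. The hardware half is left out because it needs the Media SDK; one common approach (not necessarily the author's) is to try `MFXVideoSession::Init` with `MFX_IMPL_HARDWARE` and treat any error as "no QSV".

```cpp
// Hypothetical helper mirroring only the resolution rule described above:
// the width must be a multiple of 16 and the frame must fit in 1920x1200.
// Probing for QSV hardware is intentionally not done here.
bool IsResolutionEncodable(int width, int height)
{
    const int kMaxWidth = 1920;
    const int kMaxHeight = 1200;
    return width > 0 && height > 0
        && width % 16 == 0
        && width <= kMaxWidth
        && height <= kMaxHeight;
}
```

Under these rules 1280x720 and 1920x1080 pass, while 1366x768 fails because 1366 is not a multiple of 16.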

The member function definitions are straightforward, so we leave them out. But two things deserve attention: first, the QSV encoder input must be NV12; second, when the output bitstream buffer is too small, it must be enlarged with the function ExtendMfxBitstream (around line 378):

    // ...
    else if (MFX_ERR_NOT_ENOUGH_BUFFER == sts)
    {
        // The output buffer is too small: ask the encoder for the
        // required size and enlarge m_mfx_bitsream accordingly.
        mfxVideoParam par;
        memset(&par, 0, sizeof(par));
        sts = m_p_enc->GetVideoParam(&par);
        //printf("require %d KB\r\n", par.mfx.BufferSizeInKB);
        ExtendMfxBitstream(par.mfx.BufferSizeInKB * 1024);
        break;
    }
    else
    // ...
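The body of ExtendMfxBitstream is not shown in the post. The sketch below is roughly modeled on the helper of the same name in the Media SDK samples, but it uses a hypothetical stand-in struct, `BitstreamStandIn`, carrying only the `mfxBitstream` fields it touches (`Data`, `DataOffset`, `DataLength`, `MaxLength`), so it compiles without the SDK. It should not be read as the author's implementation.

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical stand-in for mfxBitstream, keeping only the fields used here.
struct BitstreamStandIn {
    std::uint8_t* Data = nullptr;
    std::uint32_t DataOffset = 0;
    std::uint32_t DataLength = 0;
    std::uint32_t MaxLength = 0;
};

// Grows bs to new_size bytes. The pending bytes
// [DataOffset, DataOffset + DataLength) are copied to the front of the new
// buffer. Like the SDK sample helper, it refuses a size that is not larger.
bool ExtendBitstream(BitstreamStandIn& bs, std::uint32_t new_size)
{
    if (new_size <= bs.MaxLength) return false;

    std::uint8_t* data = new std::uint8_t[new_size];
    if (bs.Data != nullptr && bs.DataLength > 0)
        std::memcpy(data, bs.Data + bs.DataOffset, bs.DataLength);

    delete[] bs.Data;          // release the old buffer
    bs.Data = data;
    bs.DataOffset = 0;         // pending data now starts at the front
    bs.MaxLength = new_size;
    return true;
}
```

In the encoder class, `new_size` would come from `par.mfx.BufferSizeInKB * 1024`, as in the snippet above.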

Below is how IntelHDGraphicsH264Encoder is integrated into H264NalFactory.cpp, around line 51:

    // ...
    m_nal_queue.clear();
    #if(1)
    if (true == IntelHDGraphicsH264Encoder::IsHardwareSupport(resolution))
    {
        m_p_h264_encoder = new IntelHDGraphicsH264Encoder();
        qDebug() << "Encoder :: Intel QSV";
    }
    else
    #endif
    {
        m_p_h264_encoder = new X264Encoder();
        qDebug() << "Encoder :: X264";
    }
    // ...
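The factory above is a "prefer hardware, fall back to software" selection, with `#if(1)` acting as a compile-time switch for the QSV path. Here is a minimal, Qt-free sketch of the same pattern. `EncoderBase`, `QsvEncoderStub`, `X264EncoderStub`, and `CreateEncoder` are hypothetical stand-ins. Returning `std::unique_ptr` instead of a raw `new` is a swapped-in design choice, so the caller cannot forget to delete the encoder.

```cpp
#include <memory>
#include <string>

// Hypothetical minimal interface standing in for the project's H264Encoder.
class EncoderBase {
public:
    virtual ~EncoderBase() = default;
    virtual std::string Name() const = 0;
};

class QsvEncoderStub : public EncoderBase {
public:
    std::string Name() const override { return "Intel QSV"; }
};

class X264EncoderStub : public EncoderBase {
public:
    std::string Name() const override { return "X264"; }
};

// Prefer the hardware encoder; fall back to software otherwise.
// hardware_ok stands in for IntelHDGraphicsH264Encoder::IsHardwareSupport(resolution).
std::unique_ptr<EncoderBase> CreateEncoder(bool hardware_ok)
{
    if (hardware_ok)
        return std::make_unique<QsvEncoderStub>();
    return std::make_unique<X264EncoderStub>();
}
```

Because both encoders share one interface, the rest of the NAL factory does not need to know which one was chosen.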

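The Intel Media SDK include path and libraries are added to the qmake project file:

    # ...
    INTEL_MEDIA_SDK_PATH = $$PWD/../libs/"Intel(R) Media SDK 2019 R1"
    INCLUDEPATH += $$INTEL_MEDIA_SDK_PATH/include
    LIBS += $$INTEL_MEDIA_SDK_PATH/lib/x64/libmfx_vs2015.lib
    # the libmfx dispatcher uses Windows registry APIs
    LIBS += Advapi32.lib
    # ...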

Now the encoder works: if we save the output NAL units into a file, ffplay or VLC can play it.
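A minimal sketch of that file dump, assuming (as the successful ffplay playback suggests) that each encoded NAL unit already begins with an Annex B start code, so the units only need to be concatenated. `AppendNalUnits` is a hypothetical helper, and `std::vector<std::uint8_t>` stands in for `QByteArray` to keep it Qt-free.

```cpp
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical helper: appends encoded NAL units (each already starting
// with a 00 00 00 01 or 00 00 01 start code) to a raw .h264 elementary
// stream file. Returns false if the file cannot be opened or written.
bool AppendNalUnits(const std::string& path,
                    const std::vector<std::vector<std::uint8_t>>& nal_units)
{
    std::ofstream out(path, std::ios::binary | std::ios::app);
    if (!out) return false;
    for (const auto& nal : nal_units)
        out.write(reinterpret_cast<const char*>(nal.data()),
                  static_cast<std::streamsize>(nal.size()));
    return static_cast<bool>(out);
}
```

The resulting file can then be checked with `ffplay -f h264 output.h264`, where `-f h264` forces the raw H264 demuxer.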

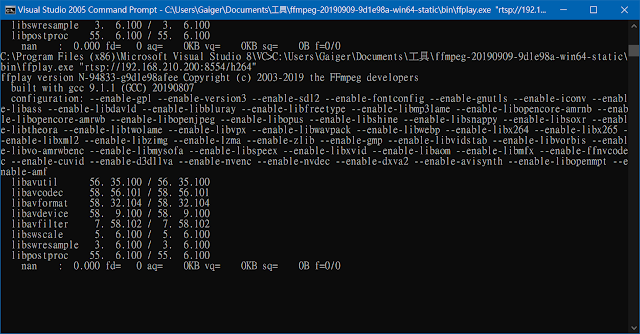

However, the encoder does not work when used for streaming.

2. Add the SPS and PPS packets to the stream

Following the approach described here and in this example code, we change the encoder as follows:

IntelHDGraphicsH264Encoder.h:

    // ...
    mfxBitstream m_mfx_bitsream;
    #if !defined(_NO_SPSPPS)
    mfxExtCodingOptionSPSPPS m_spspps_coding_option;
    mfxU8 m_sps_buffer[128];
    mfxU8 m_pps_buffer[128];
    #endif
    // ...

IntelHDGraphicsH264Encoder.cpp:

In the Init function, around line 234:

    // ...
    sts = m_p_enc->Init(&params_in);
    if (MFX_ERR_NONE != sts)
    {
        printf("MFXVideoENCODE.Init failed, %d\r\n", sts);
        return -4;
    }

    #if !defined(_NO_SPSPPS)
    // Attach an extended buffer asking GetVideoParam() to copy the SPS and
    // PPS generated by the encoder into our member buffers.
    memset(&m_spspps_coding_option, 0, sizeof(mfxExtCodingOptionSPSPPS));
    m_spspps_coding_option.Header.BufferId = MFX_EXTBUFF_CODING_OPTION_SPSPPS;
    m_spspps_coding_option.Header.BufferSz = sizeof(mfxExtCodingOptionSPSPPS);
    m_spspps_coding_option.SPSBuffer = &m_sps_buffer[0];
    m_spspps_coding_option.SPSBufSize = sizeof(m_sps_buffer);
    m_spspps_coding_option.PPSBuffer = &m_pps_buffer[0];
    m_spspps_coding_option.PPSBufSize = sizeof(m_pps_buffer);

    mfxExtBuffer* extendedBuffers[1];
    extendedBuffers[0] = (mfxExtBuffer*)&m_spspps_coding_option;
    #endif

    mfxVideoParam video_param;
    memset(&video_param, 0, sizeof(video_param));
    #if !defined(_NO_SPSPPS)
    video_param.ExtParam = &extendedBuffers[0];
    video_param.NumExtParam = 1;
    #endif
    // On return, SPSBufSize and PPSBufSize hold the actual header sizes.
    sts = m_p_enc->GetVideoParam(&video_param);
    // ...

In the Encode function, around line 414:

    // ...
    array = QByteArray((char*)m_mfx_bitsream.Data + m_mfx_bitsream.DataOffset,
                       m_mfx_bitsream.DataLength);
    #if !defined(_NO_SPSPPS)
    // Put the SPS and PPS in front of every IDR frame, so a client that
    // joins the stream can initialize its decoder at the next IDR.
    if (MFX_FRAMETYPE_IDR & m_mfx_bitsream.FrameType)
    {
        //printf("MFX_FRAMETYPE_IDR\r\n");
        ref_queue.enqueue(QByteArray((char*)m_spspps_coding_option.SPSBuffer,
                                     m_spspps_coding_option.SPSBufSize));
        h264_nal_size_queue.enqueue(m_spspps_coding_option.SPSBufSize);
        ref_queue.enqueue(QByteArray((char*)m_spspps_coding_option.PPSBuffer,
                                     m_spspps_coding_option.PPSBufSize));
        h264_nal_size_queue.enqueue(m_spspps_coding_option.PPSBufSize);
    }
    #endif
    ref_queue.enqueue(array);
    // ...
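The same queueing logic can be shown without Qt or the Media SDK. This is a hypothetical sketch (`EnqueueFrame` and the `Nal` alias are not from the project): on an IDR frame it enqueues the cached SPS, then the PPS, then the frame, and records each unit's size in a parallel queue. It assumes the frame's own size is recorded the same way in the part of Encode that the snippet elides.

```cpp
#include <cstdint>
#include <deque>
#include <vector>

using Nal = std::vector<std::uint8_t>;

// Hypothetical, SDK-free version of the Encode change: before every IDR
// frame, enqueue the cached SPS and PPS, then the frame itself, keeping a
// parallel queue of unit sizes (like h264_nal_size_queue).
void EnqueueFrame(const Nal& frame, bool is_idr,
                  const Nal& sps, const Nal& pps,
                  std::deque<Nal>& nal_queue,
                  std::deque<int>& size_queue)
{
    if (is_idr) {
        nal_queue.push_back(sps);
        size_queue.push_back(static_cast<int>(sps.size()));
        nal_queue.push_back(pps);
        size_queue.push_back(static_cast<int>(pps.size()));
    }
    nal_queue.push_back(frame);
    size_queue.push_back(static_cast<int>(frame.size()));
}
```

Repeating the parameter sets at every IDR, rather than sending them once, costs a few bytes per IDR but lets a client that connects mid-stream start decoding at the next IDR.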

Now the streaming works.
