※ The code corresponding to this post is

here.

MathJax LaTeX Example Page

CUDA (Compute Unified Device Architecture) is widely used in the Artificial Intelligence (AI) areas for its properties excellently meet deep learning computation framwork: large-scale parallel computing, localized memory accessing pattern, few collective decision and less CPU involving.

However, CUDA does not be only adopted in the AI areas, it is also appropriate to be used in the other large-scale parallel applications. In those cases, the main pain point may not be the computation speed, instead of the memory transmission efficiency. It is possible the bottleneck occuring at the host to/from device memory copy (consuming over 70% of time). If which cases, the benefit from optimizing the computation performance is paltry, we should pay attention on how to improve the transmission speed. But the most CUDA prgramming books emphasize on GPU shared-memory use, warp coalesce accessing, and cache hit-rate improvement, the authors do not spend too much space on the data transmission optimization,

This post will discuss how to optimize the CPU-GPU data transmission. Those tricks are simple, but very useful and beneficial.

We use BLAS SAXPY \( y := A\cdot X + y\) as the example for the demonstration.

零. SAXPY CUDA code

the code is very straightforward.

__global__ static void KernelSAXPY(float *p_input1, float *p_input2,

float value, int length)

{

int i = blockIdx.x * blockDim.x + threadIdx.x;

for (; i < length; i += gridDim.x * blockDim.x)

p_input2[i] = p_input1[i] * value + p_input2[i];

}

#define THREADS_IN_BLOCK (1024)

#define NUM_BLOCKS (512)

cudaEventRecord(p_compute_handle->start_including_copy, stream);

cudaMemcpy(p_dev_input1, p_host_input1, length * sizeof(float),

cudaMemcpyHostToDevice);

cudaMemcpy(p_dev_input2, p_host_input2, length * sizeof(float),

cudaMemcpyHostToDevice);

cudaEventRecord(p_compute_handle->start_excluding_copy, stream);

KernelSAXPY << < THREADS_IN_BLOCK, block_num, 0, stream >> > (

p_dev_input1, p_dev_input2, A, length);

cudaEventRecord(p_compute_handle->stop_excluding_copy, stream);

cudaMemcpy(p_host_input2, p_dev_input2, length * sizeof(float),

cudaMemcpyDeviceToHost);

cudaEventRecord(p_compute_handle->stop_including_copy, stream);

cudaEventSynchronize(p_compute_handle->stop_including_copy);

※ If NUM_BLOCKS is THREADS_IN_BLOCK * ((length + (THREADS_IN_BLOCK - 1)) / THREADS_IN_BLOCK), we could get the best computational performance. But optimized NUM_BLOCKS will make the computational time too short to be measured precisely, thus we fix this value as 512 here.

SAXPX,

data length = 4MB

unit = milli-second

@GTX 1060 3G | excluding copy

elapsed-time | including copy

elasped-time | whole processing time,

measure from CPU |

|---|

| Un-optimized | 0.32 | 11.50 | 11.43 |

It is extermely obvious the most time consuming in the memory copy. If we do not optimize the data transmission, In this case, it is not worthy to use CUDA. Using the CPU SIMD instructions definitely a better solution.

(If you inserested in SIMD, you could refer to

my previous post related to this issue.)

一. Use the pinned memory

As the 2 reference mentioned, piined memory trick improves the PCI-E data transmission :

Host (CPU) data allocations are pageable by default. The GPU cannot access data directly from pageable host memory, so when a data transfer from pageable host memory to device memory is invoked, the CUDA driver must first allocate a temporary page-locked, or “pinned”, host array, copy the host data to the pinned array https://developer.nvidia.com/blog/how-optimize-data-transfers-cuda-cc/

CUDA Driver checks, if the memory range is locked or not and then it will use a different codepath. Locked memory is stored in the physical memory (RAM), so device can fetch it w/o help from CPU (DMA, aka Async copy; device only need list of physical pages). Not-locked memory can generate a page fault on access, and it is stored not only in memory (e.g. it can be in swap), so driver need to access every page of non-locked memory, copy it into pinned buffer and pass it to DMA (Syncronious, page-by-page copy).

/**********************************************************************/

void* MallocPinned(size_t size)

{

void *ptr;

cudaHostAlloc(&ptr, size, cudaHostAllocMapped);

return ptr;

}

/**********************************************************************/

void FreePinned(void *ptr)

{

cudaFreeHost(&ptr);

}

Result :

GTX 1060 3G,

data length = 4MB

unit = milli-second

@GTX 1060 3G | excluding copy

elapsed-time | including copy

elasped-time | whole processing time,

measure from CPU |

|---|

| un-optimized | 0.32 | 11.50 | 11.43 |

| Pinned memory, copy | 0.32 | 4.73 | 4.80 |

The data transmission gets a great improvement.

二. Use zero-copy trick.

Zero-copy means the GPU operates the data on the CPU memory, it leaves out the memory copy, but it will slow the data operation.Thus, In the CUDA process, if there is only one procedure (or called subroutine) involved the CPU data (the input), it is the perfect situation to adopt this trick. SAXPY does be this situation.

Practically, after applying the pinned memory trick, use

cudaHostGetDevicePointer to map the CPU address pointers to the GPU pointers.

:

#if(1)

cudaHostGetDevicePointer(&p_dev_input1, p_host_input1, 0);

cudaHostGetDevicePointer(&p_dev_input2, p_host_input2, 0);

#else

cudaMemcpy(p_dev_input1, p_host_input1, length * sizeof(float),

cudaMemcpyHostToDevice);

cudaMemcpy(p_dev_input2, p_host_input2, length * sizeof(float),

cudaMemcpyHostToDevice);

#endif

:

KernelSAXPY << < THREADS_IN_BLOCK, block_num, 0, stream >> > (

p_dev_input1, p_dev_input2, A, length);

#if(0)

cudaMemcpy(p_host_input2, p_dev_input2, length * sizeof(float),

cudaMemcpyDeviceToHost);

#endf

※ The cost of calling cudaHostGetDevicePointer is paltry, It is not necessary to intentionally reduce the times of its calling.

Result :

GTX 1060 3G,

data length = 4MB

unit = milli-second

@GTX 1060 3G | excluding copy

elapsed-time | including copy

elasped-time | whole processing time,

measure from CPU |

|---|

| Un-optimized | 0.32 | 11.50 | 11.43 |

| Pinned memory, copy | 0.32 | 4.73 | 4.80 |

| Pinned Memory, zerocopy | 2.94 | 2.94 | 3.20 |

※ If NUM_BLOCKS is THREADS_IN_BLOCK * ((length + (THREADS_IN_BLOCK - 1)) / THREADS_IN_BLOCK), the zerocopy performance is tremendously better than the others. In this case, its whole precessing time is less than 0.2 ms.

It shows the overall performance becomes better, but the computation speed slows, as we expected.

三. Asynchronous procedure trick.

For SAXPY, though we have gotten the best performance from the zero-copy trick, we are still curious how much improvement we could get from the procedure synchronization.

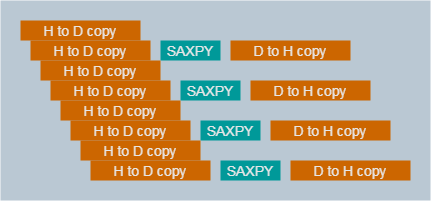

Use Nvidia Visual Profiler to view the pinned-memory case (二.), the profile shows as below :

The procedures are purely sequentialized, for those are not overlapped at all.

For SAXPY is perfect parallelable, the data is able to be diced into several sections and processed individually. It may be possible to overlap the memcpy with the computation, and shorten the overall elasped-time.

Below figure shows the ideal profile while the data sliced into 4 sections :

This behavior is called the asynchronous procedures.

To achieve this goal, we need to do :

1. Dice the data into mutiple sections.

There are 2 ways function-call model we could use : depth-first and breadth-first. For depth-first, we call one stream's all procedures, then call the next stream's ; for breadth-first, we call each stream's zeroth procedure : once all streams's zeroth have been called, then call each stream's first procedures.

甲. Depth-first call

#define ASYNC_SECTION_NUM (4)

unsigned int done_flag = 0;

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

GPUSAXPYAsynchronousCopyHostToDevice(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, A,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

GPUSAXPYAsynchronousCompute(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, A,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

GPUSAXPYAsynchronousCopyDeviceToHost(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, A,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

}

while (1)

{

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

if (0x00 == (0x01 & (done_flag >> i)))

{

if (true == IsComputeDone(cuda_handle_array[i]))

{

done_flag |= (0x1 << i);

if (ASYNC_SECTION_NUM/2 == i)

{

float inlcuding_time, excluding_time;

GetElaspedTime(cuda_handle_array[i],

&inlcuding_time, &excluding_time);

elasped_time_including_copy_in_ms += inlcuding_time;

elasped_time_excluding_copy_in_ms += excluding_time;

}

}

else

{

Sleep(1);

}

}

}

if (all_done_flag == done_flag)

break;

}

GTX 1060 3G,

data length = 4MB

unit = milli-second

@GTX 1060 3G | excluding copy

elapsed-time | including copy

elasped-time | whole processing time,

measure from CPU |

|---|

| Un-optimized | 0.32 | 11.50 | 11.43 |

| Pinned memory, copy | 0.32 | 4.73 | 4.80 |

| Pinned Memory, zerocopy | 2.94 | 2.94 | 3.20 |

Asynchronized,

depth-first, pinned | 0.09 | 1.37 | 5.00 |

The depth-first performance is worse than the sequentialized copy version.

※ The benchmark should be based on the whole processing time : the "excluding/including copy elasped-time" does NOT include the scheduling time. The asynchronized one has the shortest including copy elasped-time, but it is not the best : in the profile, there are the pauses (no data transmission/compuation) while the streams start. As the below figure shows.

乙. Breadth-first call:

unsigned int done_flag = 0;

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

GPUSAXPYAsynchronousCopyHostToDevice(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, 2.0,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

}

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

GPUSAXPYAsynchronousCompute(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, 2.0,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

}

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

GPUSAXPYAsynchronousCopyDeviceToHost(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, 2.0,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

}

while (1)

{

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

if (0x00 == (0x01 & (done_flag >> i)))

:

GTX 1060 3G,

data length = 4MB

unit = milli-second

@GTX 1060 3G | excluding copy

elapsed-time | including copy

elasped-time | whole processing time,

measure from CPU |

|---|

| un-optimized | 0.32 | 11.50 | 11.43 |

| Pinned memory, copy | 0.32 | 4.73 | 4.80 |

| Pinned Memory, zerocopy | 2.94 | 2.94 | 3.20 |

Asynchronized,

depth first, pinned | 0.09 | 1.37 | 5.00 |

Asynchronized,

breadth-first, pinned | 0.09 | 3.88 | 5.00 |

The breadth-first performance is indentical to the depth-first, nothing improved.

Why does it lead the result ? After scrutinizing the code, we could find that: the GPU cores have nothing to do before all the host to device memcpy has been done. It is, the data-hungry brings the low performance.

To solve it, we modify the code as "memcpy breadth-first". That is, through it is the breadth-first strcture, the computation calls just follow the memcpy:

unsigned int done_flag = 0;

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

GPUSAXPYAsynchronousCopyHostToDevice(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, 2.0,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

GPUSAXPYAsynchronousCompute(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, 2.0,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

}

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

GPUSAXPYAsynchronousCopyDeviceToHost(cuda_handle_array[i],

DATA_LENGTH / ASYNC_SECTION_NUM, 2.0,

&data_ptr_array[0][i * DATA_LENGTH / ASYNC_SECTION_NUM],

&data_ptr_array[1][i * DATA_LENGTH / ASYNC_SECTION_NUM]);

}

while (1)

{

for (int i = 0; i < ASYNC_SECTION_NUM; i++) {

if (0x00 == (0x01 & (done_flag >> i)))

GTX 1060 3G,

data length = 4MB

unit = milli-second

@GTX 1060 3G | excluding copy

elapsed-time | including copy

elasped-time | whole processing time,

measure from CPU |

|---|

| un-optimized | 0.32 | 11.50 | 11.43 |

| Pinned memory, copy | 0.32 | 4.73 | 4.80 |

| Pinned Memory, zerocopy | 2.94 | 2.94 | 3.20 |

Asynchronized,

depth-first, pinned | 0.09 | 1.37 | 5.00 |

Asynchronized,

breadth-first, pinned | 0.09 | 3.88 | 5.00 |

Asynchronized,

memcpy breadth-first, pinned | 0.09 | 2.91 | 4.50 |

The result gets better, as we presumed.

Why the depth-first is worse than memcpy bread-first? It is related to the CUDA stream management strategy : The scheduler will not start the other streams while current stream's following procedure depending on current's work. In our case, because the computation procedures depend on the memcpy (of course), the scaleduler would not start the other streams until the copy has been done. It eventually evolves to be sequentialized.

On the other hand, in the memcpy breadth-first, each memcpy does not depends on each other, so the scaleduler does start the other stream while current work has been done, it brings the procedures asynchronized.

The visual profilers shows the evidence.

The depth-first :

There is no asynchronization at the depth-first.

The memcpy breadth-first :

The subsequent streams start while a copy has been done.

Nvidia names the depth-first's behavior as "pseudo-dependency".

※ In my example code, I also provide the example to show how to use 2 CPU-threads to feed/acquire the data to/from the GPU asynchronously.

四. Summary.

For CUDA host-device data transmission :

甲. Pinned memory is able to get the obvious improvement.

乙. Zerocopy get the profound improvement, it requires the pinned memory.

丙. While applying the asynchronization trick, the breadth-first call is necessary to achieve the asynchronous procedures.

丁. Avoid data-hungry in the breadth-first asynchronization : make the GPU cores have work to do while the other stream starts. Practically, each computation call should just follow each memcpy.

沒有留言:

張貼留言